AI-Detection Tools Often Get It Wrong

Are teachers risking students' grades with unreliable technology?

Let's chat about AI writing detection tools: the ones that sort through content to figure out whether it was written by a human or generated by a sneaky AI.

These tools act like guards against AI-made content, but surprise! Once the text has been reworded, they can perform little better than flipping a coin [1]. And here’s the kicker: it’s only getting trickier!

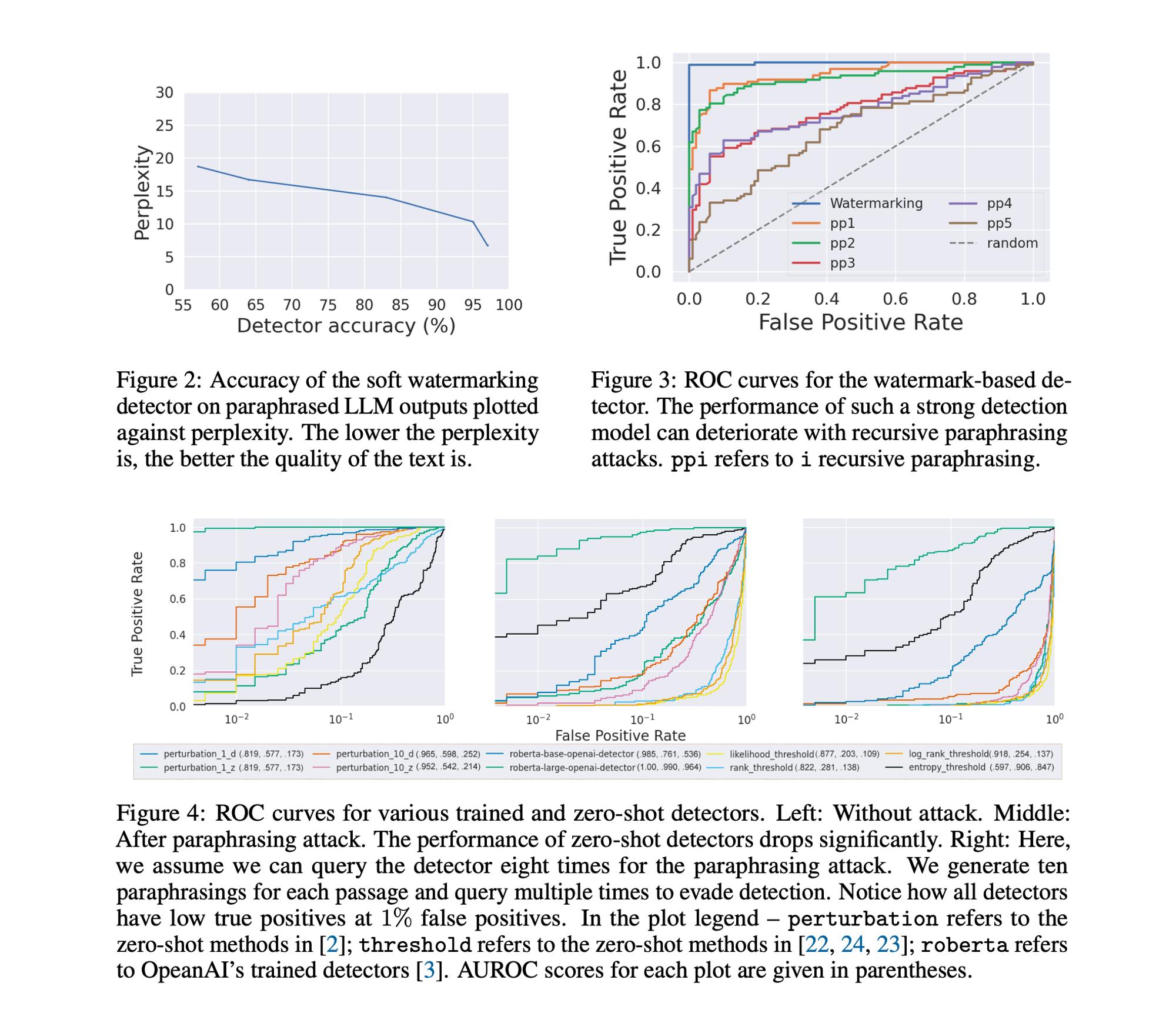

Paraphrasing is like AI slipping into a disguise: the generated text is reworded, swapping words while keeping the same meaning [1]. Even the fanciest detectors, including watermark-based ones, stumble once the wording starts to blur.

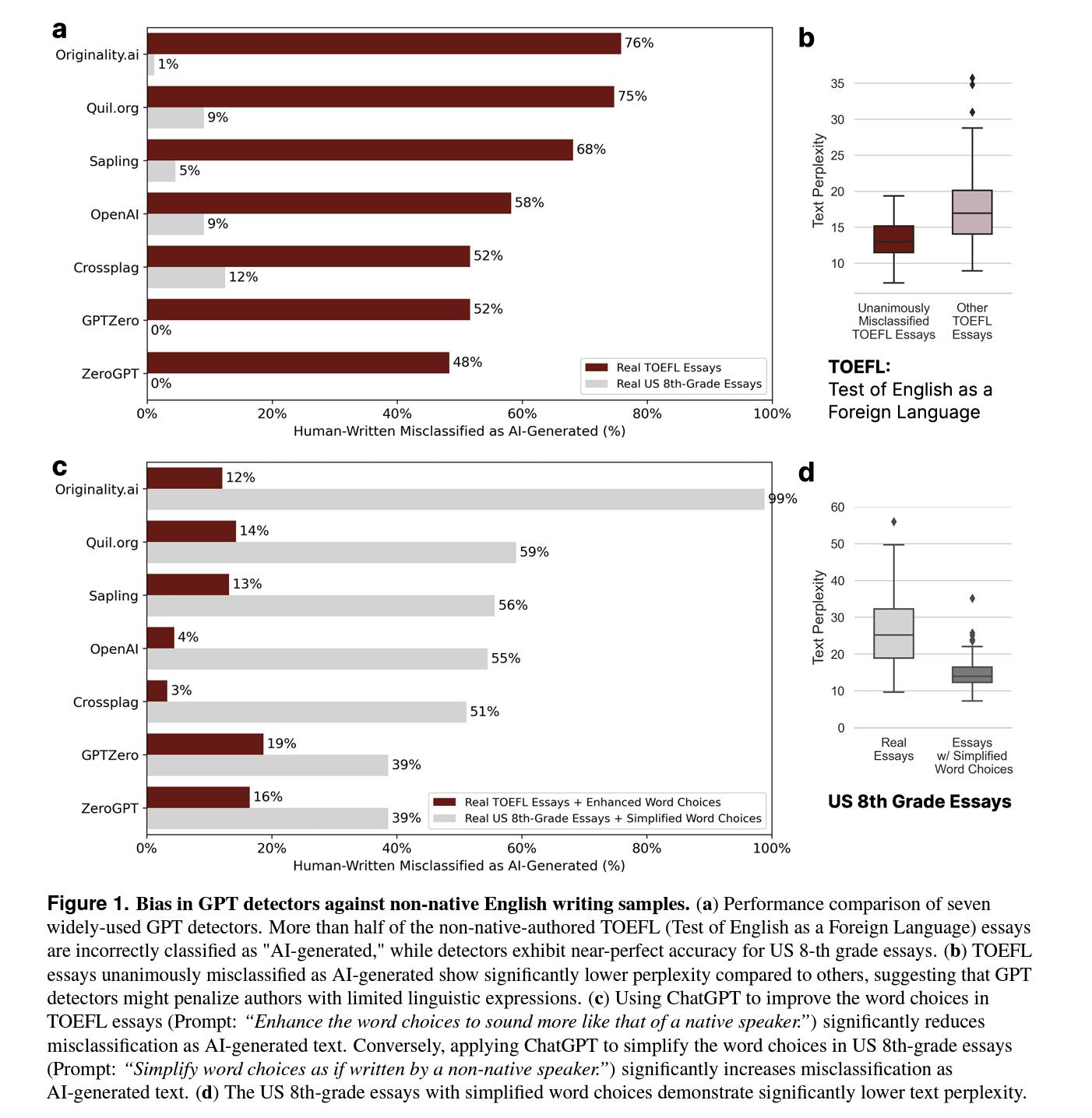

But here’s where it stings, particularly for non-native English speakers. These detectors tend to give them a hard time, frequently mistaking their writing for AI-generated content [2]. While native speakers’ essays mostly breeze through, non-native writers often use a narrower range of vocabulary and phrasing, and detectors tend to read that as machine-like. It’s like having your heartfelt song mistaken for a robotic tune.

Lastly, these detection tools, designed to be fair judges, are becoming less reliable as AI models like GPT get better and better at mimicking human writing [1].

So where do we stand? The key takeaway is that blindly trusting flawed AI text detectors is problematic, especially when evaluating student essays. These tools need major upgrades to keep pace with AI's tricks, and until they get them, relying on their verdicts could lead us astray. As AI keeps outsmarting these supposedly "fair" judges, let's not bank entirely on their judgment.

So, what’s your take on AI detection tools, as a student or a professional? If you have an opinion, let’s discuss it!

References

[1] Can AI-Generated Text be Reliably Detected? https://arxiv.org/abs/2303.11156

[2] GPT detectors are biased against non-native English writers